- Histograms of RGB

- KMeans Histograms

- Autoencoder KMeans

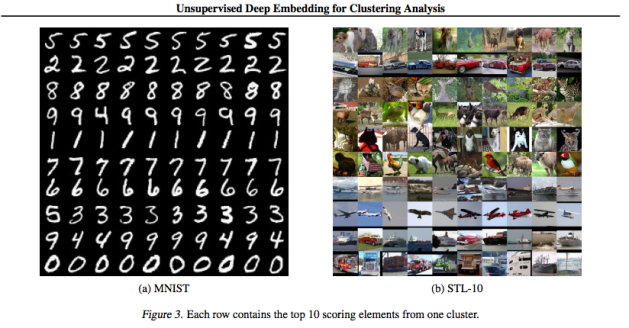

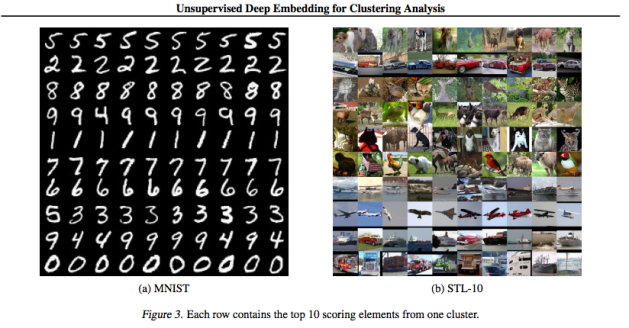

- Unsupervised Deep Embedding for Clustering Analysis (DEC)

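I have 50,000 photos that I would like to label for training a classifier. I could represent each image by its raw pixels or RGB values, but how do I divide the images into K groups according to their inherent latent semantics? Solutions 1, 2 and 3 in the list above (RGB histograms, KMeans on histograms, and an autoencoder followed by KMeans) are three ways to approach this.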
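To make the "represent each image by RGB values" idea concrete, here is a minimal sketch of a per-channel color histogram. The function name `rgb_histogram`, the choice of 4 bins per channel, and the toy pixel lists are my own assumptions for illustration. It uses only the standard library, so on real photos you would first load the pixels with an imaging library.

```python
def rgb_histogram(pixels, bins_per_channel=4):
    """Return a normalized per-channel color histogram as a flat feature vector.

    pixels: iterable of (r, g, b) tuples with integer values in 0..255.
    The result has 3 * bins_per_channel entries: R bins, then G bins, then B bins.
    """
    bin_width = 256 // bins_per_channel
    counts = [0] * (3 * bins_per_channel)
    n_pixels = 0
    for pixel in pixels:
        for channel, value in enumerate(pixel):
            # Clamp so that 255 still lands in the last bin when 256 is not
            # evenly divisible by bins_per_channel.
            bin_index = min(value // bin_width, bins_per_channel - 1)
            counts[channel * bins_per_channel + bin_index] += 1
        n_pixels += 1
    if n_pixels == 0:
        raise ValueError("image has no pixels")
    # Normalize so images of different sizes are comparable.
    return [count / n_pixels for count in counts]


# Two hypothetical 2x2 "images", flattened to lists of pixels.
reddish = [(250, 10, 10), (240, 20, 5), (255, 0, 0), (230, 30, 20)]
bluish = [(10, 10, 250), (5, 20, 240), (0, 0, 255), (20, 30, 230)]

red_features = rgb_histogram(reddish)
blue_features = rgb_histogram(bluish)
```

Each image becomes a short fixed-length vector regardless of its resolution, which is what a clustering algorithm needs as input. The histogram throws away all spatial layout, so it captures color palette rather than semantics.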

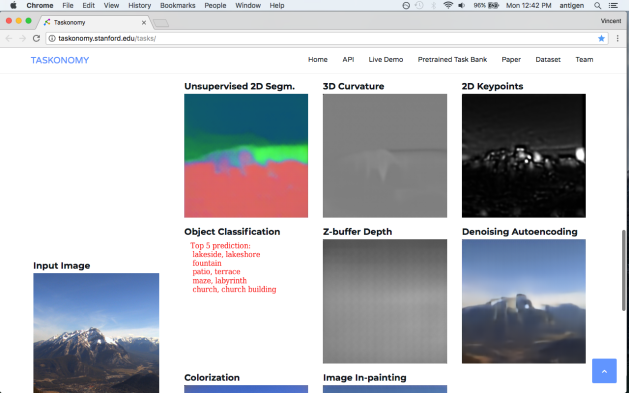

The traditional approach is to first extract feature vectors using domain-specific knowledge and then run a clustering algorithm on the extracted features.
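The second half of that pipeline, clustering the extracted features, can be sketched with a plain k-means (Lloyd's algorithm). This is a dependency-free illustration, not a tuned implementation: the helper names, the toy 3-dimensional "mean color" features, and the fixed seed are all assumptions. For 50,000 real feature vectors a library implementation would be the sensible choice.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(vectors, k, iterations=50, seed=0):
    """Cluster feature vectors with Lloyd's algorithm; returns (labels, centroids)."""
    rng = random.Random(seed)
    # Initialize centroids from k distinct data points.
    centroids = [list(v) for v in rng.sample(vectors, k)]
    labels = None
    for _ in range(iterations):
        # Assignment step: each vector goes to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda j: squared_distance(v, centroids[j]))
            for v in vectors
        ]
        # Update step: each centroid moves to the mean of its members.
        for j in range(k):
            members = [v for v, lab in zip(vectors, new_labels) if lab == j]
            if members:  # keep the old centroid if a cluster is empty
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
        if new_labels == labels:
            break  # assignments stopped changing, so the algorithm has converged
        labels = new_labels
    return labels, centroids


# Hypothetical features for four images: two reddish, two bluish.
features = [
    [0.9, 0.1, 0.1],
    [0.8, 0.2, 0.1],
    [0.1, 0.1, 0.9],
    [0.2, 0.1, 0.8],
]
labels, centroids = kmeans(features, k=2)
```

The cluster ids themselves are arbitrary (0 and 1 may swap with a different seed); what matters is which images end up sharing a label. The quality of the grouping is bounded by the quality of the hand-designed features, which is the limitation the deep clustering methods below try to remove.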

My colleague said I had to use deep learning, so I researched it and found DEC, a unified framework that clusters images directly and, according to the paper, has computational complexity linear in the number of data points. This newer category of clustering algorithms built on deep learning is typically called Deep Clustering.

From the paper:

Clustering is central to many data-driven application domains and has been studied extensively in terms of distance functions and grouping algorithms. Relatively little work has focused on learning representations for clustering. In this paper, we propose Deep Embedded Clustering (DEC), a method that simultaneously learns feature representations and cluster assignments using deep neural networks. DEC learns a mapping from the data space to a lower-dimensional feature space in which it iteratively optimizes a clustering objective. Our experimental evaluations on image and text corpora show significant improvement over state-of-the-art methods.
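The "clustering objective" mentioned in the abstract can be sketched in a few lines. As I read the paper, DEC computes a soft assignment of each embedded point to each cluster centre with a Student's t kernel, builds a sharpened target distribution from those assignments, and minimizes the KL divergence between the two. The code below only evaluates these quantities on hypothetical 2-D embeddings and centroids. In the real method the embeddings come from a pretrained encoder, the centroids are initialized with k-means, and both are updated by gradient descent, none of which is shown here. The function names are my own.

```python
import math


def soft_assignments(embeddings, centroids, alpha=1.0):
    """q[i][j]: Student's t similarity between embedding i and centroid j, row-normalized."""
    q = []
    for z in embeddings:
        row = [
            (1.0 + squared_norm(z, mu) / alpha) ** (-(alpha + 1.0) / 2.0)
            for mu in centroids
        ]
        total = sum(row)
        q.append([value / total for value in row])
    return q


def squared_norm(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def target_distribution(q):
    """p[i][j]: q squared, divided by the soft cluster frequency, then row-normalized."""
    cluster_freq = [sum(col) for col in zip(*q)]
    p = []
    for row in q:
        weights = [value * value / freq for value, freq in zip(row, cluster_freq)]
        total = sum(weights)
        p.append([w / total for w in weights])
    return p


def kl_divergence(p, q):
    """KL(P || Q) summed over all points and clusters."""
    return sum(
        p_ij * math.log(p_ij / q_ij)
        for p_row, q_row in zip(p, q)
        for p_ij, q_ij in zip(p_row, q_row)
        if p_ij > 0.0
    )


# Hypothetical 2-D embeddings forming two loose groups, and two cluster centres.
embeddings = [[0.0, 0.0], [0.2, 0.1], [3.0, 3.0], [2.9, 3.2]]
centroids = [[0.0, 0.0], [3.0, 3.0]]

q = soft_assignments(embeddings, centroids)
p = target_distribution(q)
loss = kl_divergence(p, q)
```

Squaring the assignments makes confident assignments even more confident, so training toward `p` pulls each point closer to the centre it already leans toward. Dividing by the cluster frequency is meant to keep large clusters from dominating the embedding.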

DEC paper: https://arxiv.org/abs/1511.06335

https://github.com/fferroni/DEC-Keras/blob/master/keras_dec.py

https://github.com/XifengGuo/DEC-keras/blob/master/DEC.py

DCEC paper (ICONIP 2017): ICONIP17-DCEC.pdf

https://arxiv.org/abs/1709.08374

https://github.com/panji1990/Deep-subspace-clustering-networks